Top 7 Data Quality Tools and Software for 2024


by Anoop Singh


It can be tough to manage data manually, and doing so can sometimes lead to errors or inefficiencies. Spreadsheets can get overly complex, and data quality can suffer.

This has become a large enough stumbling block to the success of business intelligence and the Big Data industry that a number of data quality tools have stepped in to help solve the problem.

Let’s discuss seven of the leading solutions that can help you simplify the work of data management so you can actually turn all those cell values into something that can be used for business decisions.

SEE: Get big data certification training with this bundle from TechRepublic Academy.

Top data quality tools comparison

While the core functionality — collecting, normalizing and organizing data — is essentially the same from solution to solution, there’s a lot of wiggle room for value propositions and differentiation. Some teams need a low-code or white-glove solution, while others need deep customization options to better fit the tool to their use case.

What’s more, some tools will focus more narrowly on just one or a few aspects of the overall process.

The individual entries down below will go into more detail regarding what each tool offers, but this table should give you an overview of which ones can cover end-to-end functions and which ones are more limited and focused.

| Tool | Free tier | Starting price | Data cleansing | Customizable alerts | Data matching |
|---|---|---|---|---|---|
| Data Ladder | No | Custom pricing | Yes | Yes | Limited |
| OpenRefine | Yes | Free (open source) | Limited | Limited | Yes |
| Talend | No | Custom pricing | Yes | Yes | Yes |
| Ataccama | No | Custom pricing | Yes | Yes | Yes |
| Dataedo | No | $18k per year | Limited | Limited | Yes |
| Precisely | No | Custom pricing | Yes | Yes | Yes |
| Informatica | Yes | Custom pricing | Yes | Yes | Yes |

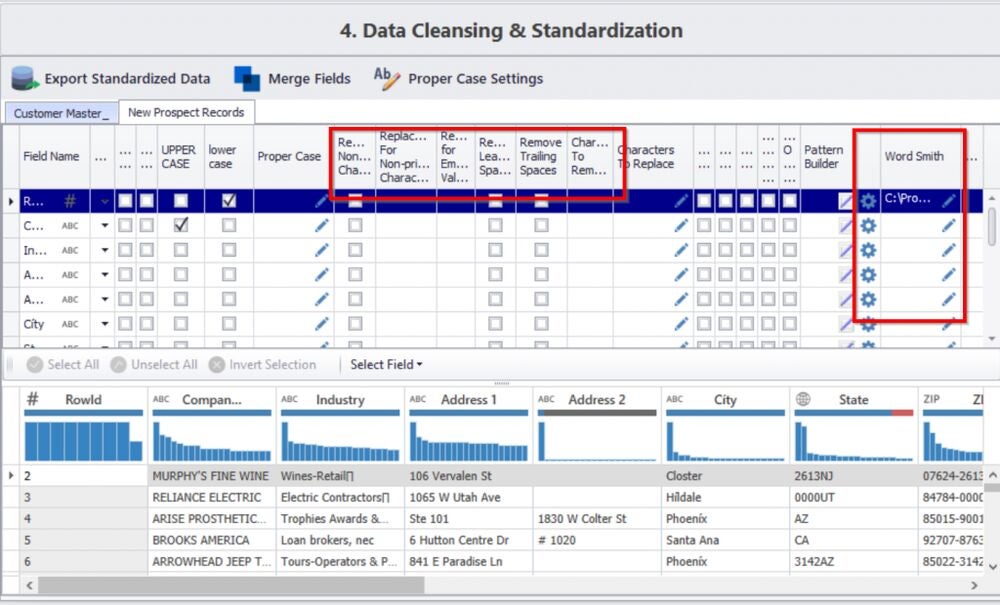

Data Ladder: Best for large datasets

One of the most difficult challenges you can throw at a data management team is reconciling massive datasets from disparate sources. Just like it takes industrial-grade equipment to process industrial quantities of raw material, it requires powerful systems to parse and process the volume and variety of data that larger businesses generate in the course of normal operations these days.

Data Ladder is among the best tools for addressing these kinds of concerns.

Built in part to facilitate address verification on a colossal scale, Data Ladder covers the entire data quality management (DQM) lifecycle, from importing to deduplication and even merge-and-purge survivorship automation. It also supports fuzzy matching: pairing records that refer to the same entity despite common abbreviations, misspellings and other typical human-entry errors.
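Data Ladder's matching engine is proprietary, so the following is only a minimal sketch of the general fuzzy-matching idea, built on Python's standard-library `difflib.SequenceMatcher`. The abbreviation map, the 0.85 threshold and the `normalize`/`is_fuzzy_match` helpers are hypothetical choices for illustration, not Data Ladder's actual rules.

```python
from difflib import SequenceMatcher

# Hypothetical abbreviation map; a production tool would use much larger dictionaries.
ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road", "inc": "incorporated"}


def normalize(value: str) -> str:
    """Lowercase, replace punctuation with spaces and expand known abbreviations."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in value.lower())
    return " ".join(ABBREVIATIONS.get(token, token) for token in cleaned.split())


def similarity(a: str, b: str) -> float:
    """Score two values from 0.0 (nothing in common) to 1.0 (identical after normalizing)."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def is_fuzzy_match(a: str, b: str, threshold: float = 0.85) -> bool:
    """Treat two values as the same entity when their similarity clears the threshold."""
    return similarity(a, b) >= threshold


print(is_fuzzy_match("123 Main St.", "123 main street"))     # True: abbreviation expanded
print(is_fuzzy_match("123 Main Street", "123 Mian Street"))  # True: transposed letters
print(is_fuzzy_match("123 Main Street", "987 Oak Avenue"))   # False: different address
```

Normalizing before scoring matters: without the abbreviation step, "St." and "street" would count as a mismatch, which is exactly the kind of human-entry variation fuzzy matching is meant to absorb.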

Although Data Ladder’s data quality solutions are user-friendly and require minimal training, some advanced features can be tricky to use. A few customer reviews also report that some advanced features lack the documentation needed to put them to good use.

For more information, read the full Data Ladder review.

Pricing

- Customized pricing.

- No free tier or free trial option.

Features

- Import data from a variety of sources, including local files, cloud storage, APIs and relational databases.

- Normalize and organize data, including profiling, cleansing, matching, deduplication and more.

- A 360-degree view of data through industry-leading data profiling tools.

- Powerful merge and purge functions, completely customizable to your use case.
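Merge-and-purge survivorship decides which values "survive" when duplicate records are collapsed into one. The minimal sketch below assumes a simple, hypothetical rule set: group records by a normalized email key, let newer values win, and never let an empty value overwrite existing data. Data Ladder's own survivorship rules are configurable in the product and are not reproduced here.

```python
from collections import defaultdict
from datetime import date

records = [
    {"email": "Ann@Example.com", "name": "Ann Lee", "phone": "555-0101", "updated": date(2023, 1, 5)},
    {"email": "ann@example.com ", "name": "Ann M. Lee", "phone": "", "updated": date(2023, 6, 1)},
    {"email": "bob@example.com", "name": "Bob Ray", "phone": "555-0199", "updated": date(2022, 3, 9)},
]


def merge_purge(rows, key_field="email"):
    """Collapse duplicates that share a normalized key into one surviving record each."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_field].strip().lower()].append(row)

    survivors = []
    for key, dupes in groups.items():
        # Assumed survivorship rule: apply records oldest to newest so newer values win,
        # but skip empty values so they never overwrite existing data.
        merged = {}
        for row in sorted(dupes, key=lambda r: r["updated"]):
            for field, value in row.items():
                if value not in ("", None):
                    merged[field] = value
        merged[key_field] = key  # store the normalized key
        survivors.append(merged)
    return survivors


for survivor in merge_purge(records):
    print(survivor)
```

Here Ann's two records become one: the newer name ("Ann M. Lee") survives, while the older phone number is kept because the newer record left that field blank.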


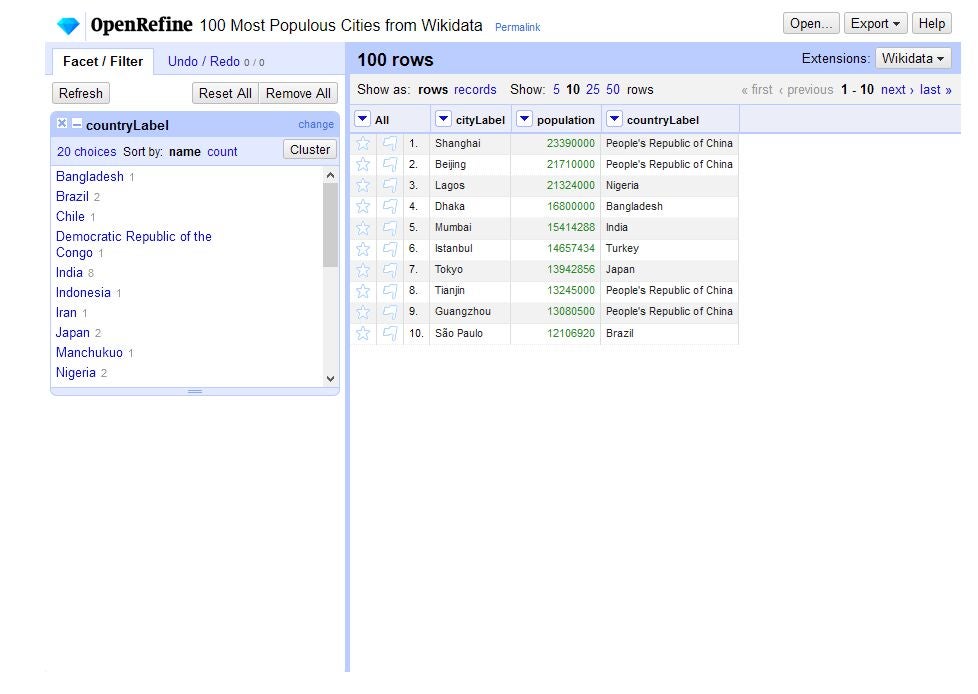

OpenRefine: Best free and open-source solution

Not every organization is in the market for a big-budget technology solution. In fact, there are a number of use cases where even nominal costs can be a dealbreaker. And, unfortunately, free versions of data management software aren’t terribly common.

OpenRefine is a remarkable attempt to fill this gap, offering professional data cleansing without charging its users a single penny.

Formerly a Google project called Google Refine, the tool was handed over to a community of volunteers when Google ended its support. Rebranded as OpenRefine to reflect its community-run, open-source nature, it’s offered for free to anyone who wants to use it: businesses, nonprofits, journalists, librarians and just about anyone who’s sick of using spreadsheets.

Because the tool is free, there are a few details you should be aware of. For one, the interface may look a bit “outdated” to some and may take some tinkering to learn how to use effectively. Most notable, though, is that it’s designed for local use. The program can be downloaded and run offline, and in fact it’s meant to be run that way.

You can host the tool elsewhere, but that presents some potential security concerns, and OpenRefine makes this clear with some pretty direct disclaimers. Either way, you’ll likely experience slower performance than with other solutions in this list, particularly when trying to digest larger datasets.

Pricing

- As an open-source tool, OpenRefine is completely free to use.

Features

- Powerful heuristics allow users to fix data inconsistencies by clustering or merging similar data values.

- Data reconciliation to match the dataset to external databases.

- Faceting feature to drill through large datasets as well as the ability to apply various filters to the dataset.
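To illustrate how clustering similar values can work, here is a simplified Python take on the "fingerprint" key-collision method described in OpenRefine's clustering documentation. It is not OpenRefine's code: it only handles ASCII punctuation, and the `fingerprint` and `cluster` helper names are hypothetical.

```python
import string
import unicodedata
from collections import defaultdict


def fingerprint(value: str) -> str:
    """Simplified fingerprint key: similar spellings collapse to the same key."""
    value = value.strip().lower()
    # Drop ASCII punctuation characters.
    value = "".join(ch for ch in value if ch not in string.punctuation)
    # Fold accented characters to plain ASCII (e.g. "ü" -> "u").
    value = unicodedata.normalize("NFKD", value).encode("ascii", "ignore").decode("ascii")
    # Sort unique tokens so word order does not matter.
    return " ".join(sorted(set(value.split())))


def cluster(values):
    """Group values whose fingerprints collide; return only groups with 2+ members."""
    groups = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return [members for members in groups.values() if len(members) > 1]


cities = ["New York", "new york ", "York, New", "Zürich", "Zurich", "Boston"]
print(cluster(cities))
# [['New York', 'new york ', 'York, New'], ['Zürich', 'Zurich']]
```

Each cluster is a candidate for merging into one canonical value; in a tool like OpenRefine, a person typically reviews the suggested clusters before accepting the merge.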


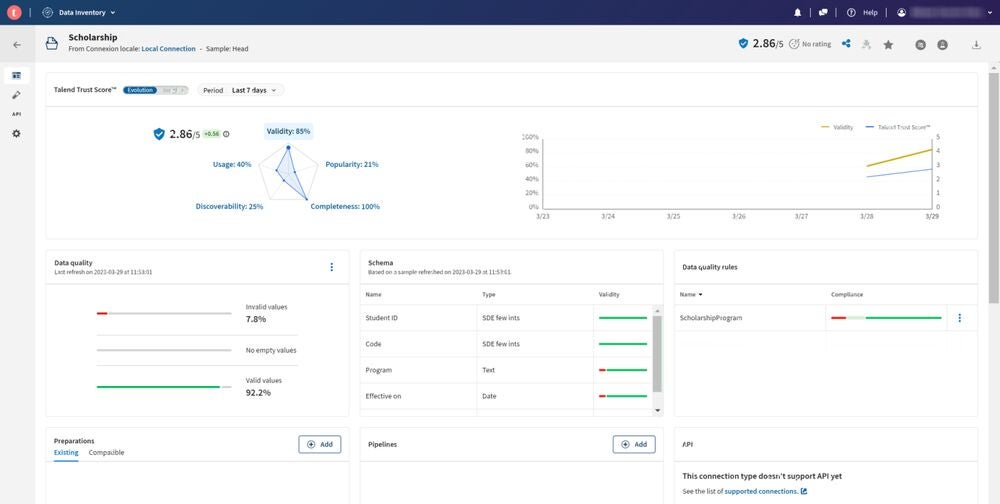

Talend: Best for scalability

One of Talend’s main value propositions is the accessibility of the technology. Built as a comprehensive no-code framework, it’s designed to allow those with minimal data and software engineering chops to actually put something together themselves. Because hey, not everyone who needs accurate data is a data scientist.

The platform is both intuitive (including drag-and-drop interfaces for designing pipelines) and robust enough to accommodate a wide range of data management experience levels. It can also be hosted on-premises, in the cloud or via hybrid deployment.

One downside, though, is that pesky issue of large datasets. Those who use Talend may see slowdowns and other performance issues when trying to process high volumes of data all at once. So while it’s an excellent way to connect the entire organization to a single source of truth rather quickly, you’ll have to be careful when batching the queries and functions.
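Talend's own performance tuning happens inside the product, so what follows is only a generic Python sketch of the batching idea mentioned above: rather than pushing a whole dataset through a transformation at once, process it in fixed-size chunks so memory use stays bounded. The `batched` helper, the `clean_batch` step and the 1,000-row batch size are hypothetical.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batched(rows: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield successive lists of at most `size` rows without loading everything at once."""
    iterator = iter(rows)
    while batch := list(islice(iterator, size)):
        yield batch


def clean_batch(batch: List[dict]) -> List[dict]:
    """Hypothetical per-batch step: trim whitespace in every string field."""
    return [{k: v.strip() if isinstance(v, str) else v for k, v in row.items()} for row in batch]


def run_pipeline(rows: Iterable[dict], size: int = 1000) -> int:
    """Clean rows batch by batch and return how many rows were processed."""
    processed = 0
    for batch in batched(rows, size):
        cleaned = clean_batch(batch)
        processed += len(cleaned)  # In practice, write `cleaned` to the target here.
    return processed


# A generator stands in for a large source such as a database cursor.
source = ({"id": i, "name": f"  customer {i}  "} for i in range(2500))
print(run_pipeline(source))  # 2500 rows, handled in batches of 1000, 1000 and 500
```

The batch size is a trade-off: larger batches mean fewer round trips to the target system, while smaller ones keep memory use and the cost of a failed batch low.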

Explore our in-depth review of Talend Open Studio.

Pricing

- Customized pricing.

- No free tier.

Features

- Real-time data profiling and data masking.

- Ability to perform detailed data profiling, including identification of data patterns and dependencies.

- Variety of prebuilt data quality rules for common scenarios, and drag-and-drop interfaces for hassle-free pipeline design.

- Advanced algorithms for data matching, extensive API and integration tools, and more.
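Data masking hides sensitive values while keeping records usable for testing or analytics. The minimal sketch below assumes two hypothetical masking rules: keep only the last four digits of a card number, and keep only the first character and the domain of an email address. Talend's masking functions are configured in its own tooling and are not reproduced here.

```python
def mask_card(number: str) -> str:
    """Replace every digit except the last four with '*', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in number)
    digits_seen = 0
    masked = []
    for ch in number:
        if ch.isdigit():
            digits_seen += 1
            masked.append(ch if digits_seen > total_digits - 4 else "*")
        else:
            masked.append(ch)  # keep dashes and spaces so the format stays recognizable
    return "".join(masked)


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


print(mask_card("4111-1111-1111-1234"))    # ****-****-****-1234
print(mask_email("jane.doe@example.com"))  # j*******@example.com
```

Keeping the original length and separators means masked data still passes format checks in downstream test systems.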


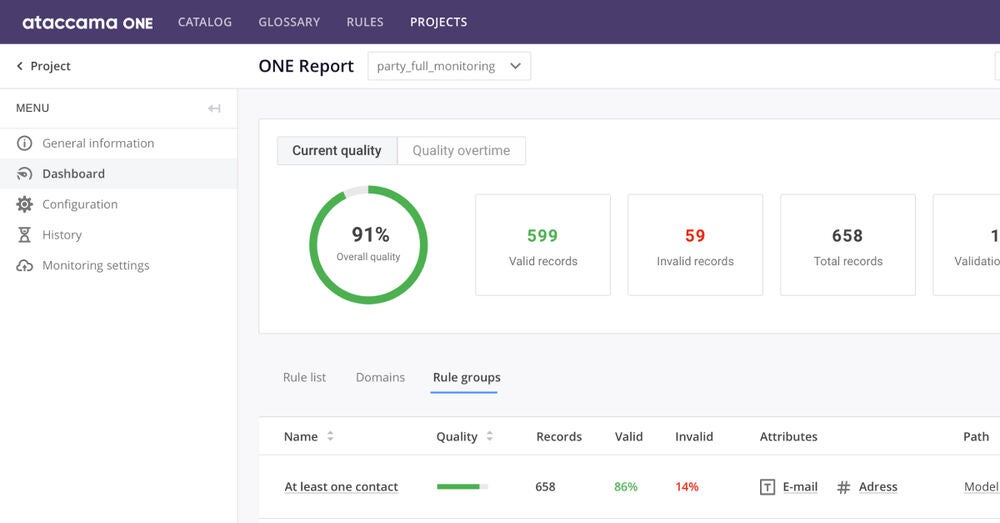

Ataccama: Best for AI capabilities

If not for Ataccama’s more recently released AI capabilities, we’d have it listed here as “best for compliance and governance.” Privacy, access management, monitoring and other critical InfoSec concerns are all fully enabled and supported by the platform.

That said, its new AI features are noteworthy enough to warrant a change in title. In fact, many of the core security features are now also powered by machine learning models. You can even use Ataccama to train your own models unique to your use case.

Put simply, Ataccama makes it easy to supercharge small data teams and enable them to accomplish goals that typically require much larger ones.

SEE: Here’s how Ataccama ONE compares to Informatica Data Quality.

Pricing

- Customized pricing.

- No free tier.

Features

- Extensive governance controls that support data teams’ compliance efforts.

- Variety of built-in data quality rules and standards.

- AI-powered functions for data cleansing, implementing governance policies, reporting and more.

- Ability to deploy on cloud, on-premises or in a hybrid arrangement.
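Built-in data quality rules are usually declarative checks applied to every record. The sketch below uses a hypothetical rule format (a rule name, a column and a validity predicate) to show the general pattern; it is not Ataccama's rule syntax.

```python
import re
from typing import Callable, Dict, List, Tuple

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

# Hypothetical rule format: (rule name, column, predicate returning True for valid values).
RULES: List[Tuple[str, str, Callable[[object], bool]]] = [
    ("email_format", "email", lambda v: isinstance(v, str) and EMAIL_PATTERN.fullmatch(v) is not None),
    ("age_in_range", "age", lambda v: isinstance(v, int) and 0 <= v <= 120),
    ("country_not_empty", "country", lambda v: bool(v)),
]


def evaluate(rows: List[Dict[str, object]]) -> Dict[str, List[int]]:
    """Return, for each rule, the indexes of rows that fail it."""
    failures: Dict[str, List[int]] = {name: [] for name, _, _ in RULES}
    for index, row in enumerate(rows):
        for name, column, is_valid in RULES:
            if not is_valid(row.get(column)):
                failures[name].append(index)
    return failures


rows = [
    {"email": "a@example.com", "age": 34, "country": "US"},
    {"email": "not-an-email", "age": 34, "country": "DE"},
    {"email": "c@example.org", "age": 250, "country": ""},
]
print(evaluate(rows))
# {'email_format': [1], 'age_in_range': [2], 'country_not_empty': [2]}
```

Keeping rules as data rather than hard-coded checks is what lets a platform ship a library of prebuilt rules and let users add their own.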


Dataedo

Pricing

- Starting at $18k per year.

- No free tier.

Features

- Tools for identifying data quality issues, which can also be used to gather feedback on data quality from other data users.

- Discover and document data relationships with entity relationship diagrams.

- Collect and scan metadata from multiple sources to automatically build a data dictionary.

- Foreign key (FK) relationship feature to minimize data inconsistencies and errors.
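Dataedo documents foreign key relationships for you, but the underlying consistency check is easy to illustrate with plain SQL. The minimal sketch below uses Python's built-in `sqlite3` module with two hypothetical tables, `customers` and `orders`, and finds orphaned rows: orders whose `customer_id` points to no existing customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# No FOREIGN KEY constraint is declared here, so the broken reference can be inserted.
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ann'), (2, 'Bob');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 2, 40.0), (12, 3, 15.5);
    """
)

# A LEFT JOIN keeps every order; a NULL customer id on the right side means the reference is broken.
orphans = conn.execute(
    """
    SELECT o.id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id IS NULL
    ORDER BY o.id
    """
).fetchall()

print(orphans)  # [(12, 3)]
conn.close()
```

The same query pattern works for any pair of tables where one column is meant to reference another, whether or not the database enforces the relationship.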


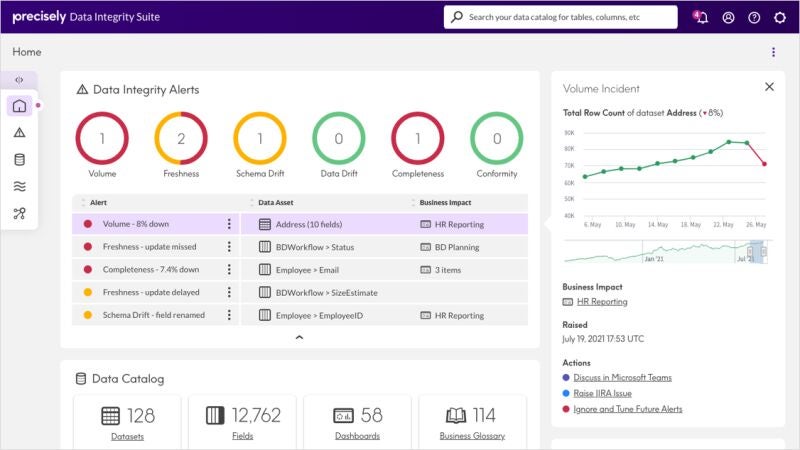

Precisely: Best for data enrichment

Another list entry that features a heavy focus on address and geographical data, Precisely has made a name for itself largely through the data enrichment functions it offers. Precisely Streets, for example, provides a “spatially accurate mapping framework,” while Precisely Boundaries facilitates “continuous global boundary coverage.”

Similar enrichment for points of interest, addresses, demographics and more helps the platform stand out as a solution designed to turn static data into your most valuable business asset.

The downside of Precisely is that it can be difficult to use. Complex installation procedures and a challenging user interface are customers’ most common complaints about Precisely software. Tech-savvy power users might not find Precisely Trillium challenging to use, but less software-literate customers will most likely need structured training.

SEE: Read how Precisely Trillium Quality compares to Ataccama ONE.

Pricing

- Customized pricing.

- No free tier.

Features

- Smart data quality management that leverages AI tools and automation features to deliver instant results.

- High-performance data processing for large volumes of data. Faster data processing times help maximize efficiency for data-intensive organizations.

- As the name implies, powerful enrichment tools enable even pin-drop accuracy for physical addresses (just as one example).
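Enrichment generally means joining your records against a trusted reference dataset to add attributes you did not collect yourself. In this minimal sketch the reference data is a tiny, hypothetical ZIP-code lookup; Precisely's actual datasets (such as Streets or Boundaries) are licensed products and are not modeled here.

```python
from typing import Dict, List

# Hypothetical reference data keyed by five-digit US ZIP code.
ZIP_REFERENCE: Dict[str, Dict[str, str]] = {
    "10001": {"city": "New York", "state": "NY"},
    "60601": {"city": "Chicago", "state": "IL"},
}


def enrich(records: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Add city/state from the reference table and flag records with unknown ZIP codes."""
    enriched = []
    for record in records:
        zip_code = str(record.get("zip", "")).strip()[:5]  # "10001-1234" -> "10001"
        match = ZIP_REFERENCE.get(zip_code)
        enriched.append({**record, **(match or {}), "enriched": match is not None})
    return enriched


customers = [{"name": "Ann", "zip": "10001-1234"}, {"name": "Bob", "zip": "99999"}]
for row in enrich(customers):
    print(row)
```

Flagging records that could not be enriched, instead of silently skipping them, gives the data team a worklist of values to correct at the source.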


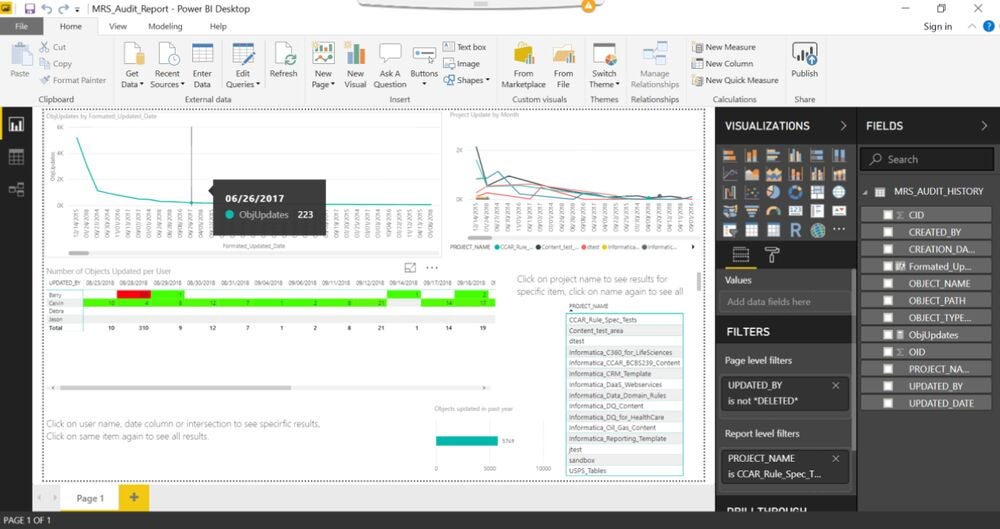

Informatica: Best for data profiling

One of the most foundational features that we’ve all grown accustomed to is search. Google has, indeed, rather spoiled us with largely dependable and helpful search engine results pages (SERPs).

If you’ve ever gone to a website, tried searching for something specific using its search bar, then cried out in frustration, knowing full well that Google would have accurately identified your search intent and served up the right results, you’ve experienced this problem.

Closely related, and quickly catching up to its predecessor, is voice search. There’s quite a bit of convenience to be had in voice-activated queries, provided they actually work as intended.

Informatica aims to provide the best of the AI assistant experience, but for your own data management efforts. It does this through a combination of extensive data profiling, impressive natural language processing (NLP) through its CLAIRE AI engine and some potent analytics. The end result is a powerful automation suite that can dramatically streamline your efforts to cleanse and optimize your data.

For more information, read the full Informatica Data Quality review.

Pricing

- Customized pricing.

- Free tier available.

Features

- Prebuilt rules and accelerators to automate data quality processes.

- Variety of data monitoring tools, including iterative data analysis to detect and identify data quality issues.

- Role-based capabilities to empower a variety of business users who can play a key role in monitoring and improving data quality.

- AI and machine learning tools to help minimize errors.


Key features of data quality tools

Data profiling

Data profiling allows users to analyze and explore data to understand how it is structured and how it can be used for maximum benefit. This feature can include tools for analyzing data patterns, data dependencies and the ability to define data quality rules.
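To make profiling concrete, here is a minimal column profiler in plain Python. It reports null counts, distinct values and "pattern" masks, where letters become `A` and digits become `9`, a common profiling technique for spotting inconsistent formats. The `pattern` and `profile_column` names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional


def pattern(value: str) -> str:
    """Map letters to 'A' and digits to '9', keeping other characters as-is."""
    return "".join("A" if ch.isalpha() else "9" if ch.isdigit() else ch for ch in value)


def profile_column(values: List[Optional[str]]) -> Dict[str, object]:
    """Summarize completeness, cardinality and format patterns for one column."""
    present = [v for v in values if v not in (None, "")]
    return {
        "rows": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "patterns": Counter(pattern(v) for v in present).most_common(),
    }


phones = ["555-0101", "555-0199", "5550123", None, "555-01O1"]
print(profile_column(phones))
# {'rows': 5, 'nulls': 1, 'distinct': 4,
#  'patterns': [('999-9999', 2), ('9999999', 1), ('999-99A9', 1)]}
```

The rare patterns are the interesting ones: `999-99A9` exposes a letter "O" typed in place of a zero, and `9999999` shows a missing separator.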

Data parsing

Data parsing allows the conversion of data from one format to another. A data quality tool uses data parsing for data validation and data cleansing against predefined standards. Another important benefit of data parsing is that it allows for error and anomaly detection. In addition, advanced data parsing features offer automation tools, which are particularly useful for large volumes of data.
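A common parsing task is converting dates that arrive in several formats into one standard form and flagging anything that cannot be parsed. The sketch below uses Python's `datetime.strptime`; the list of accepted formats is an assumption you would tailor to your own sources.

```python
from datetime import date, datetime
from typing import List, Optional, Tuple

# Assumed input formats; order matters when a string could match more than one.
FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d %b %Y"]


def parse_date(raw: str) -> Optional[date]:
    """Return a date for the first matching format, or None if nothing matches."""
    for fmt in FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    return None


def parse_column(values: List[str]) -> Tuple[List[str], List[str]]:
    """Split values into ISO 8601 date strings and anomalies that need review."""
    parsed, anomalies = [], []
    for raw in values:
        result = parse_date(raw)
        if result is None:
            anomalies.append(raw)
        else:
            parsed.append(result.isoformat())
    return parsed, anomalies


print(parse_column(["2024-03-15", "03/15/2024", "15 Mar 2024", "2024-02-30", "n/a"]))
# (['2024-03-15', '2024-03-15', '2024-03-15'], ['2024-02-30', 'n/a'])
```

Note that "2024-02-30" is rejected even though it has the right shape, because `strptime` validates the calendar date; that is the anomaly-detection benefit of parsing over simple pattern checks.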

Data cleaning and standardization

Data cleaning and standardization help identify incorrect or duplicate data and modify it according to predefined requirements. With this feature, users can ensure data exists in consistent formats across datasets. In addition, data cleaning helps enrich data by filling in missing values from internal or external data sources.
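Standardization usually combines a few simple transformations: trimming, mapping known variants to a canonical value, reformatting, and filling gaps from another source. The sketch below assumes a hypothetical mapping of US state spellings and a hypothetical internal CRM lookup used to fill missing states.

```python
import re
from typing import Dict, Optional

# Hypothetical canonical mapping for the "state" column.
STATE_VARIANTS = {"calif": "CA", "california": "CA", "ca": "CA", "new york": "NY", "ny": "NY", "n.y.": "NY"}

# Hypothetical internal reference used to fill missing states by customer ID.
CRM_STATES = {"C-002": "NY"}


def standardize_state(raw: Optional[str]) -> Optional[str]:
    """Map known spellings to a two-letter code; blank or unknown values become None."""
    if not raw:
        return None
    return STATE_VARIANTS.get(raw.strip().lower())


def standardize_phone(raw: str) -> str:
    """Keep digits only and format 10-digit US numbers as XXX-XXX-XXXX; else return as-is."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the US country code
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}" if len(digits) == 10 else raw


def clean(record: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Standardize one record, filling a missing state from the CRM reference."""
    state = standardize_state(record.get("state")) or CRM_STATES.get(record["id"])
    return {**record, "state": state, "phone": standardize_phone(record["phone"])}


print(clean({"id": "C-001", "state": " Calif ", "phone": "(212) 555 0101"}))
print(clean({"id": "C-002", "state": "", "phone": "+1 212.555.0199"}))
```

Returning values unchanged when they cannot be standardized (as the phone function does) keeps bad data visible for review instead of silently discarding it.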

Monitoring and notifications

Monitoring tools track data throughout its lifecycle and notify administrators and management of any issues that need to be addressed. This may include the ability to define data quality KPIs and access real-time data quality insights. Some advanced applications also allow for customizable alerts.
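A data quality KPI can be as simple as a completeness percentage checked against a threshold. The sketch below assumes a hypothetical 95% completeness target and uses Python's standard `logging` module as a stand-in for a real notification channel such as email or chat.

```python
import logging
from typing import Dict, List, Optional

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("dq-monitor")

# Assumed KPI target: at least 95% of rows must have a value in each monitored column.
COMPLETENESS_TARGET = 0.95


def completeness(rows: List[Dict[str, Optional[str]]], column: str) -> float:
    """Share of rows with a non-empty value in `column` (1.0 for an empty batch)."""
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows) if rows else 1.0


def check(rows: List[Dict[str, Optional[str]]], columns: List[str]) -> List[str]:
    """Compute completeness per column, log a warning for breaches, return breached columns."""
    breaches = []
    for column in columns:
        score = completeness(rows, column)
        if score < COMPLETENESS_TARGET:
            breaches.append(column)
            log.warning("completeness of %s is %.1f%% (target %.0f%%)",
                        column, score * 100, COMPLETENESS_TARGET * 100)
        else:
            log.info("completeness of %s is %.1f%%", column, score * 100)
    return breaches


batch = [{"email": "a@x.com", "phone": ""}] * 3 + [{"email": "b@x.com", "phone": "555-0101"}] * 17
print(check(batch, ["email", "phone"]))  # ['phone'], since only 17 of 20 rows have a phone
```

Running a check like this on every incoming batch, rather than once a quarter, is what turns data quality from a cleanup project into ongoing monitoring.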

Frequently asked questions about data quality

What are data quality tools?

Data quality tools are used to monitor and analyze business data, determining if the quality of the data makes it useful enough for business decision-making while also defining how data quality can be improved. This can include gathering data from multiple data sources, such as databases, emails, social media, IoT devices and data logs, and effectively scrubbing, cleaning, analyzing and managing the data to make it ready for use.

Combing through datasets to find and remove duplicate entries, fix formatting issues and correct errors can use up valuable time and resources. Although data quality can be improved through manual processes, data quality tools make the process more effective, efficient and reliable.

Why are data quality tools important?

Companies are increasingly taking a data-driven approach to their decision-making. This includes decisions regarding product development, marketing, sales and other functions of the business.

And there is certainly no lack of data available for these decisions. However, the quality of data remains an issue. According to Gartner, poor data quality costs companies $12.9 million on average every year.

One of the advantages of using data for decision-making is that businesses can derive valuable, quantitative insights to achieve positive outcomes such as reduced costs, increased revenue, improved employee productivity, increased customer satisfaction, more effective marketing campaigns and a bigger overall competitive advantage.

The effectiveness of business decisions is directly related to the quality of data, which is why data quality tools are so important. They help extract greater value from data and allow businesses to work with a larger volume of data, using less time and fewer resources to comb through data and maintain its quality. Data quality tools offer various features that can help sort data, identify issues and fix them for optimal business outcomes.

SEE: Learn more about the benefits of data quality software.

How do I choose the best data quality tool for my business?

The best data quality tool for your business depends on your unique requirements and priorities. As a first step, you need to clearly define what problems you are looking to solve with the data quality tool. This will help you identify the features you need in the software. At this point, you should consider defining your budget constraints to narrow down your options.

Most of the top data quality solutions offer a broad range of functionality, though some specialize in particular functions. In addition, some applications offer advanced tools but have a steep learning curve, so you may have to choose between ease of use and functionality.

You might also want to consider the scalability of the software to ensure you don’t outgrow it as your business needs change. We recommend getting a detailed demo of the software and using a free trial, where one is available, before committing to a solution.

Review methodology

We looked at a wide range of data quality solutions to compile this list of the best software. We assessed each tool on several parameters, including usability, scalability, standout features and customer support. We also considered customer testimonials and ratings as vital components of our overall assessment of each tool.
