AI and NASA Swift Map the Universe’s Farthest Gamma-Ray Bursts


by Anoop Singh


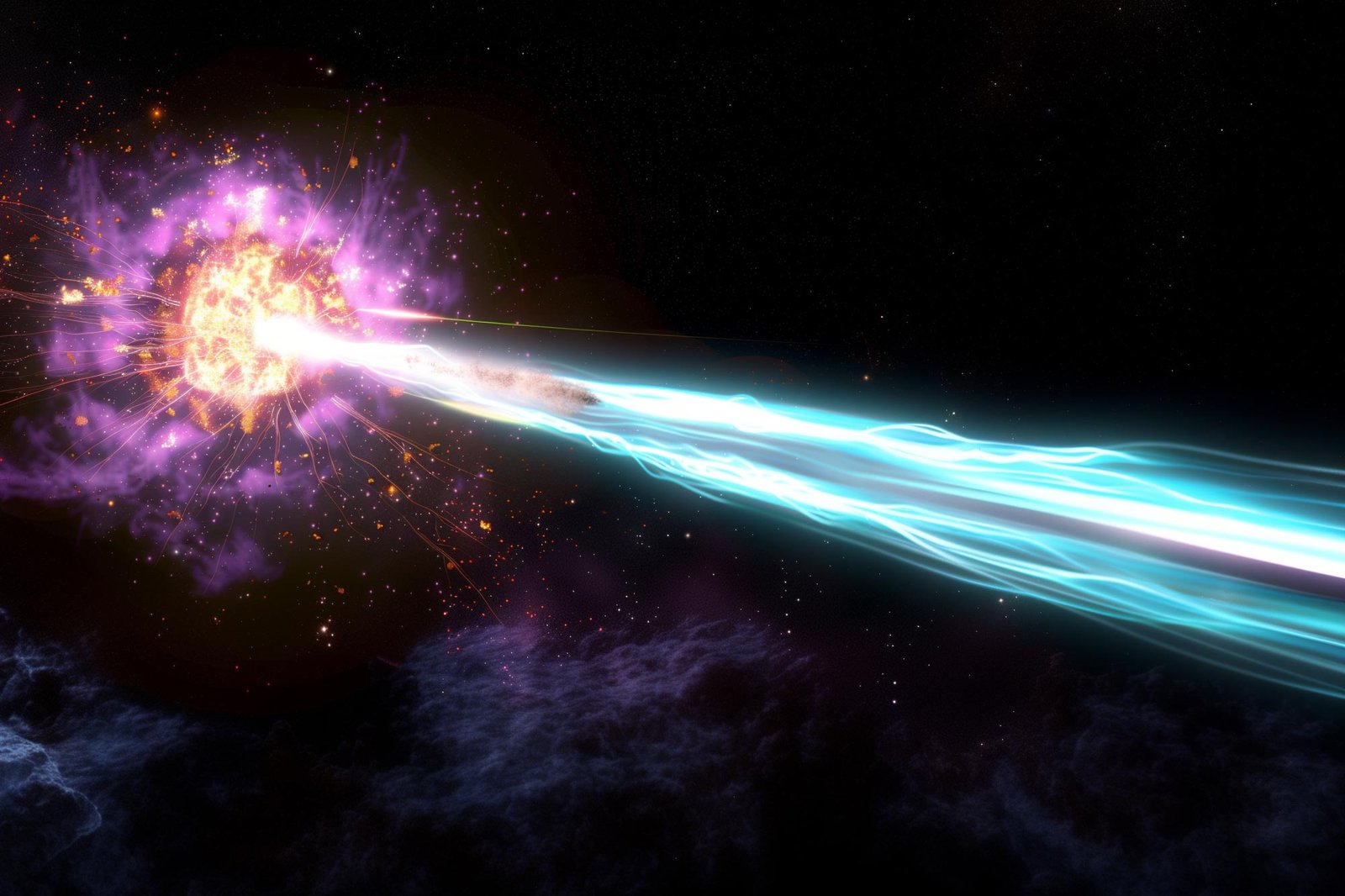

Scientists have applied advanced machine learning techniques to significantly enhance the precision of distance measurements for gamma-ray bursts (GRBs). By combining data from NASA’s Swift Observatory with machine learning models, they have enabled more accurate estimations of GRB distances, leading to improved understanding of cosmic phenomena and paving the way for future astronomical discoveries. Credit: SciTechDaily.com

Machine learning revolutionizes distance measurement in astronomy, providing precise estimates for gamma-ray bursts and aiding in cosmic exploration.

The advent of artificial intelligence (AI) has been hailed by many as a societal game-changer, as it opens a universe of possibilities to improve nearly every aspect of our lives.

Astronomers are now using AI, quite literally, to measure the expansion of our universe.

Pioneering Precision in Cosmic Measurements

Two recent studies led by Maria Dainotti, a visiting professor with UNLV’s Nevada Center for Astrophysics and assistant professor at the National Astronomical Observatory of Japan (NAOJ), incorporated multiple machine learning models to add a new level of precision to distance measurements for gamma-ray bursts (GRBs) – the most luminous and violent explosions in the universe.

In just a few seconds, GRBs release the same amount of energy our sun releases in its entire lifetime. Because they are so bright, GRBs can be observed across a wide range of distances – including at the edge of the visible universe – and aid astronomers in their quest to chase the oldest and most distant stars. But due to the limits of current technology, only a small percentage of known GRBs have all of the observational characteristics astronomers need to calculate how far away they occurred.

Swift, illustrated here, is a collaboration between NASA’s Goddard Space Flight Center in Greenbelt, Maryland, Penn State in University Park, the Los Alamos National Laboratory in New Mexico, and Northrop Grumman Innovation Systems in Dulles, Virginia. Other partners include the University of Leicester and Mullard Space Science Laboratory in the United Kingdom, Brera Observatory in Italy, and the Italian Space Agency. Credit: NASA’s Goddard Space Flight Center/Chris Smith (KBRwyle)

Advancing Gamma-Ray Burst Research With AI

Dainotti and her teams combined GRB data from NASA’s Neil Gehrels Swift Observatory with multiple machine learning models to overcome the limitations of current observational technology and to estimate more precisely the distance of GRBs for which it is unknown. Because GRBs can be observed both far away and at relatively close distances, knowing where they occurred can help scientists understand how stars evolve over time and how many GRBs can occur in a given space and time.

“This research pushes forward the frontier in both gamma-ray astronomy and machine learning,” said Dainotti. “Follow-up research and innovation will help us achieve even more reliable results and enable us to answer some of the most pressing cosmological questions, including the earliest processes of our universe and how it has evolved over time.”

AI Boosts Limits of Deep-Space Observation

In one study, Dainotti and Aditya Narendra, a final-year doctoral student at Poland’s Jagiellonian University, used several machine learning methods to precisely measure the distance of GRBs observed by the space-based Swift Ultraviolet/Optical Telescope (UVOT) and by ground-based telescopes, including the Subaru Telescope. The measurements were based solely on other GRB properties that are not related to distance. The research was published on May 23 in The Astrophysical Journal Letters.[1]

“The outcome of this study is so precise that we can determine using predicted distance the number of GRBs in a given volume and time (called the rate), which is very close to the actual observed estimates,” said Narendra.

Superlearner: Enhancing Predictive Power in Astronomy

Another study led by Dainotti and international collaborators successfully measured GRB distance with machine learning, using afterglow data from NASA’s Swift X-ray Telescope (XRT) for what are known as long GRBs. GRBs are believed to occur in different ways. Long GRBs happen when a massive star reaches the end of its life and explodes in a spectacular supernova. Another type, known as short GRBs, happens when the remnants of dead stars, such as neutron stars, merge gravitationally and collide with each other.

Dainotti says the novelty of this approach comes from using several machine learning methods together to improve their collective predictive power. This method, called Superlearner, assigns each algorithm a weight between 0 and 1, with each weight corresponding to the predictive power of that individual method.

“The advantage of the Superlearner is that the final prediction is always more performant than the singular models,” said Dainotti. “Superlearner is also used to discard the algorithms which are the least predictive.”
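As a rough illustration of the weighting idea described above, and not the authors’ actual pipeline, the Python sketch below blends the predictions of several base models using weights between 0 and 1 that sum to 1, chosen to minimize squared error on held-out predictions. The toy numbers, the model names, the helper functions (`mse`, `blend`, `fit_weights`), the Frank-Wolfe-style solver, and the 0.05 pruning cutoff are all assumptions made for illustration; the published Superlearner may fit and constrain its weights differently.

```python
def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def blend(preds, weights):
    """Weighted average of the per-model prediction lists."""
    n = len(preds[0])
    return [sum(w * p[i] for w, p in zip(weights, preds)) for i in range(n)]


def fit_weights(preds, target, iters=200):
    """Find weights in [0, 1] summing to 1 that minimize squared error.

    Uses Frank-Wolfe steps with exact line search, starting from the
    best single model, so the error never increases along the way.
    """
    k = len(preds)
    best = min(range(k), key=lambda j: mse(preds[j], target))
    w = [0.0] * k
    w[best] = 1.0
    for _ in range(iters):
        current = blend(preds, w)
        resid = [c - t for c, t in zip(current, target)]
        # Gradient of the squared error w.r.t. each weight (up to a constant).
        grad = [sum(r * p for r, p in zip(resid, preds[j])) for j in range(k)]
        j = min(range(k), key=lambda idx: grad[idx])
        # Move the blend toward model j by the step that minimizes the error.
        direction = [p - c for p, c in zip(preds[j], current)]
        denom = sum(d * d for d in direction)
        if denom == 0.0:
            break
        step = -sum(r * d for r, d in zip(resid, direction)) / denom
        step = max(0.0, min(1.0, step))
        if step == 0.0:
            break  # no model improves the blend any further
        w = [(1.0 - step) * wi for wi in w]
        w[j] += step
    return w


# Made-up held-out values, for illustration only (not real GRB data).
target = [0.5, 1.2, 2.0, 3.1, 4.0, 2.6]
model_names = ["model_a", "model_b", "model_c"]  # hypothetical base learners
preds = [
    [0.8, 1.5, 2.2, 3.5, 4.3, 2.9],  # tends to overestimate
    [0.3, 0.9, 1.7, 2.8, 3.8, 2.2],  # tends to underestimate
    [2.0, 2.0, 2.0, 2.0, 2.0, 2.0],  # uninformative constant guess
]

weights = fit_weights(preds, target)
ensemble_error = mse(blend(preds, weights), target)

# Discard the least predictive learners (the cutoff is an arbitrary choice).
kept = [name for name, w in zip(model_names, weights) if w >= 0.05]

for name, w in zip(model_names, weights):
    print(f"{name}: weight={w:.3f}")
print("ensemble MSE:", round(ensemble_error, 4), "kept:", kept)
```

Because the search starts from the best single model and only takes steps that reduce the error, the blend in this sketch is never worse than that model on the data used to fit the weights. How well it performs on bursts it has not seen would still need separate validation.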

This study, which was published on February 26 in The Astrophysical Journal Supplement Series,[2] reliably estimates the distance of 154 long GRBs for which the distance was previously unknown, significantly boosting the number of bursts of this type with known distances.

Answering Puzzling Questions on GRB Formation

A third study, published on February 21 in the Astrophysical Journal Letters[3] and led by Stanford University astrophysicist Vahé Petrosian and Dainotti, used Swift X-ray data to answer puzzling questions by showing that the GRB rate – at least at small relative distances – does not follow the rate of star formation.

“This opens the possibility that long GRBs at small distances may be generated not by a collapse of massive stars but rather by the fusion of very dense objects like neutron stars,” said Petrosian.

With support from NASA’s Swift Observatory Guest Investigator program (Cycle 19), Dainotti and her colleagues are now working to make the machine learning tools publicly available through an interactive web application.

References:

- “Gamma-Ray Bursts as Distance Indicators by a Statistical Learning Approach” by Maria Giovanna Dainotti, Aditya Narendra, Agnieszka Pollo, Vahé Petrosian, Malgorzata Bogdan, Kazunari Iwasaki, Jason Xavier Prochaska, Enrico Rinaldi and David Zhou, 24 May 2024, The Astrophysical Journal Letters. DOI: 10.3847/2041-8213/ad4970

- “Inferring the Redshift of More than 150 GRBs with a Machine-learning Ensemble Model” by Maria Giovanna Dainotti, Elias Taira, Eric Wang, Elias Lehman, Aditya Narendra, Agnieszka Pollo, Grzegorz M. Madejski, Vahé Petrosian, Malgorzata Bogdan, Apratim Dey and Shubham Bhardwaj, 26 February 2024, The Astrophysical Journal Supplement Series. DOI: 10.3847/1538-4365/ad1aaf

- “Progenitors of Low-redshift Gamma-Ray Bursts” by Vahé Petrosian and Maria G. Dainotti, 21 February 2024, The Astrophysical Journal Letters. DOI: 10.3847/2041-8213/ad2763
